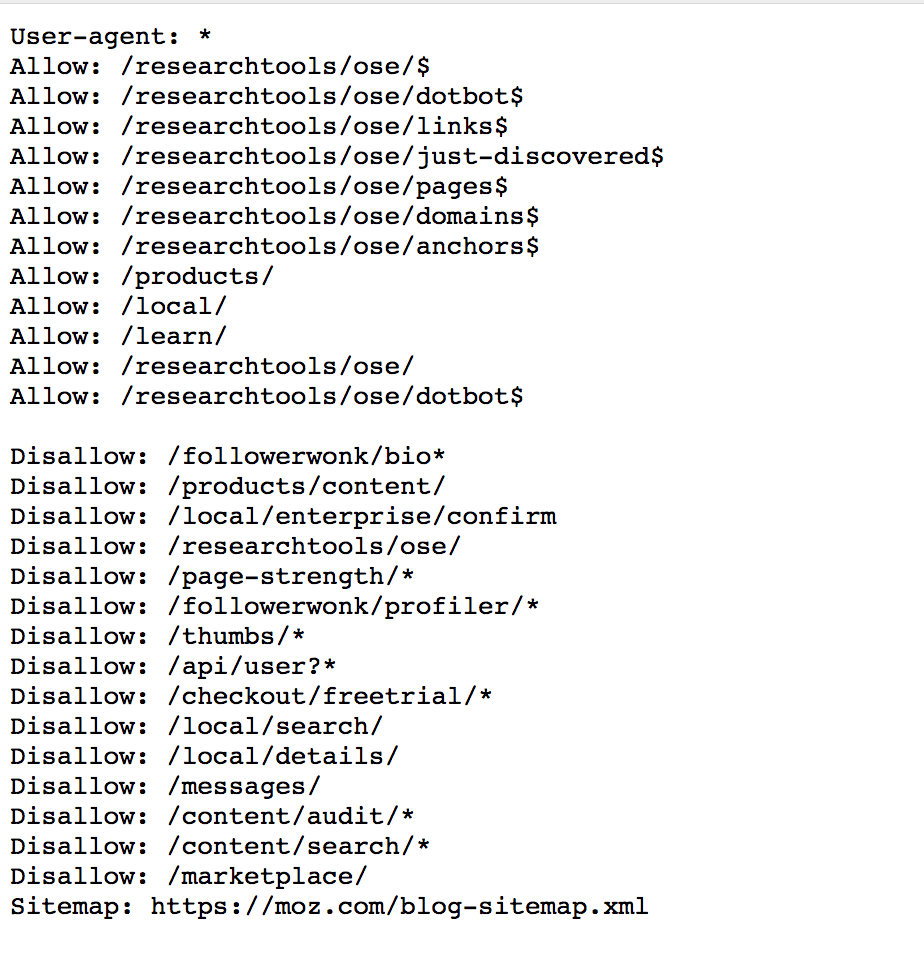

Disallow All Pages Except Robots.txt

Learn how to use the robots.txt file to disallow all pages except robots.txt itself, and how doing so affects your website's SEO and indexing.

Blocking every page for all compliant crawlers takes two lines. The `*` in `User-agent: *` means the section applies to all robots, and the `/` in `Disallow: /` tells the robot that it should not visit any pages on the site.
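
The `*` and `/` described above are the values of a `User-agent: *` line and a `Disallow: /` line. The following minimal sketch uses Python's standard `urllib.robotparser` module to parse that two-line file from a string and ask whether a crawler may fetch a few URLs. The `build_parser` helper, the `example.com` URLs, and the `ExampleBot` agent name are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# "Disallow all": "*" selects every robot, "/" is a prefix of every path.
DISALLOW_ALL = """\
User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(DISALLOW_ALL)
    # Placeholder agents and URLs; every combination is reported as blocked.
    for agent in ("Googlebot", "ExampleBot"):
        for url in ("https://example.com/", "https://example.com/blog/post.html"):
            print(f"{agent:<10} {url:<40} allowed={rp.can_fetch(agent, url)}")
```

Every line prints `allowed=False`. An empty value (`Disallow:` with nothing after it) has the opposite effect and allows everything.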

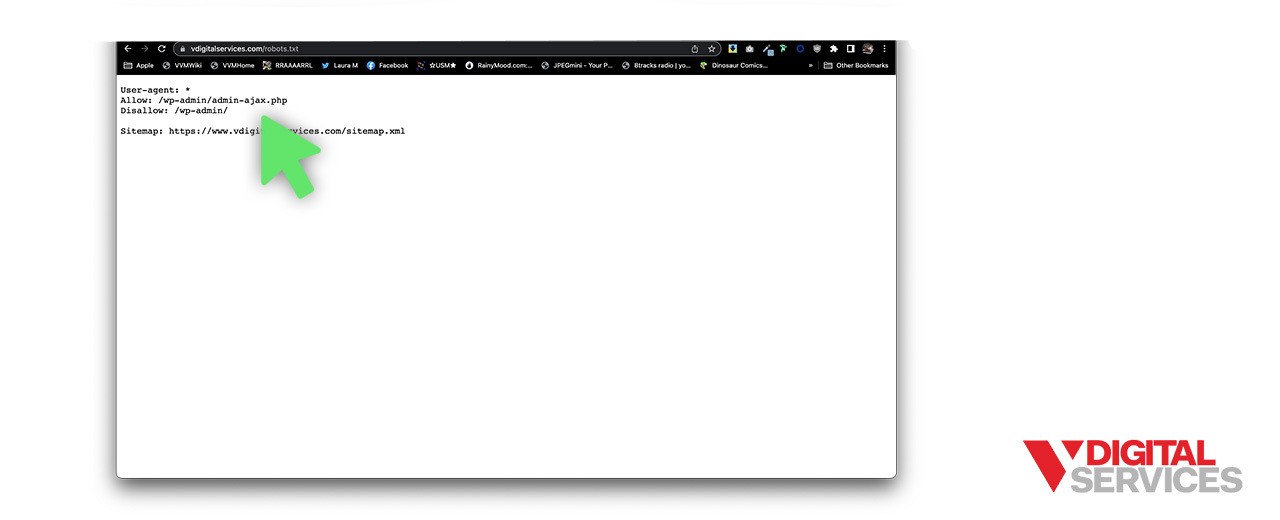

Rule Order: Crawlers Read Robots.txt From Top To Bottom

The original robots.txt specification says that crawlers should read robots.txt from top to bottom and use the first matching rule. Google, in particular, does not depend on file order: it applies the most specific rule, meaning the one with the longest matching path, and when an `Allow` and a `Disallow` rule are equally specific it uses the less restrictive `Allow`. For details, see Google's documentation of the robots.txt rules it supports.
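
The difference between the first-match reading and the most-specific-match reading can be sketched in Python. `first_match_allowed` relies on the standard `urllib.robotparser` module, which checks a group's rules in file order. `longest_match_allowed` is a hypothetical, simplified approximation of the longest-match behaviour Google describes: it ignores the `*` and `$` wildcards and assumes a single `User-agent: *` group. The robots.txt content and the `/public/` path are assumptions for illustration, not rules taken from this article.

```python
from urllib.robotparser import RobotFileParser

# Assumed example: block everything except /public/, with Disallow listed FIRST.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
Allow: /public/
"""


def first_match_allowed(robots_txt: str, url: str, agent: str = "ExampleBot") -> bool:
    """First-match reading: urllib.robotparser returns the verdict of the
    first rule (in file order) whose path is a prefix of the URL path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


def longest_match_allowed(robots_txt: str, path: str) -> bool:
    """Simplified longest-match reading (no wildcard support): the rule with
    the longest matching path wins, and Allow wins an equal-length tie."""
    best_len, allowed = -1, True  # no matching rule: the path is allowed
    for raw in robots_txt.splitlines():
        raw = raw.split("#", 1)[0]  # drop comments
        field, _, value = raw.partition(":")
        field, value = field.strip().lower(), value.strip()
        if field not in ("allow", "disallow") or not value:
            continue  # skip User-agent lines and empty rules
        if path.startswith(value):
            is_allow = field == "allow"
            if len(value) > best_len or (len(value) == best_len and is_allow):
                best_len, allowed = len(value), is_allow
    return allowed


if __name__ == "__main__":
    url = "https://example.com/public/page.html"
    print(first_match_allowed(ROBOTS_TXT, url))                    # False: "Disallow: /" is read first
    print(longest_match_allowed(ROBOTS_TXT, "/public/page.html"))  # True: "/public/" is longer than "/"
```

With `Disallow: /` listed first, the two readings disagree about `/public/page.html`. Listing `Allow: /public/` before `Disallow: /` makes them agree, so putting the more specific rule first is the portable choice.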

Alternative: A Robots Meta Tag Instead Of Robots.txt

Another possibility would be to not use robots.txt at all, but to place a robots meta tag in the `<head>` of every page except the ones you want indexed.
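
Assuming the tag meant here is the standard robots meta tag with a `noindex` value, such as `<meta name="robots" content="noindex">`, a minimal sketch with Python's `html.parser` can check which pages carry it. This also explains why this route means not blocking those pages in robots.txt: a crawler only sees the tag if it is allowed to fetch the page. `RobotsMetaFinder` and `has_noindex` are hypothetical helper names.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = []  # e.g. ["noindex", "nofollow"]

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # content="noindex, nofollow" -> ["noindex", "nofollow"]
            self.directives += [
                d.strip().lower() for d in attr.get("content", "").split(",") if d.strip()
            ]


def has_noindex(html: str) -> bool:
    """Return True if the page asks robots not to index it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives


if __name__ == "__main__":
    hidden = '<html><head><meta name="robots" content="noindex"></head></html>'
    visible = "<html><head><title>Home</title></head></html>"
    print(has_noindex(hidden), has_noindex(visible))  # True False
```

Self-closing tags such as `<meta ... />` are also covered, because `HTMLParser` routes them through `handle_starttag` by default.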